Trying the Sarvam-M 24B Indic LLM

Sarvam AI has released its very first hybrid Indic LLM, Sarvam-M 24B, built on top of the Mistral-Small model (hence the M in the name). It's a text-only model trained on all major Indian languages. Since it's open-source, you can run it locally on your computer. You can also try it on the playground or use it via the API.

I learned about the launch on X, where opinions about it are mixed. I am going to try it anyway and document what I learn in this post.

The first impression

I skimmed through the launch blog post to see the benchmarks, and the numbers look impressive when compared against Mistral Small 24B, Gemma 3 27B, Llama 4 Scout 17B/109B, and Llama 3.3 70B. The model is claimed to perform:

- 20% better on Indian language tasks

- 21.6% better on math tasks

- 17.6% better on programming tasks

The model is trained to understand and generate text in 10 Indian languages: Hindi, Tamil, Telugu, Malayalam, Punjabi, Odia, Gujarati, Marathi, Kannada, and Bengali.

As mentioned in the post, they used Supervised Fine-Tuning (SFT) and Reinforcement Learning with Verifiable Rewards (RLVR) to train the model, followed by inference optimization.
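The "verifiable" part of RLVR means the reward comes from an automatic check rather than a subjective rating: a math answer either matches the reference or it doesn't. As a toy illustration only (this is not Sarvam's reward function, and `mathReward` is a name I made up), such a check could look like this:

```javascript
// Hypothetical reward function, for illustration only.
// Returns 1 if the last number in the model's answer equals the reference, else 0.
function mathReward(modelAnswer, referenceAnswer) {
  // Collect every integer or decimal number that appears in the answer.
  const numbers = String(modelAnswer).match(/-?\d+(?:\.\d+)?/g);
  if (!numbers) return 0; // no number at all, so nothing to verify

  // Treat the last number as the model's final answer.
  const finalNumber = Number(numbers[numbers.length - 1]);
  return finalNumber === Number(referenceAnswer) ? 1 : 0;
}
```

A real training setup would need far more careful answer parsing, but the idea is the same: the score is computed, not judged.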

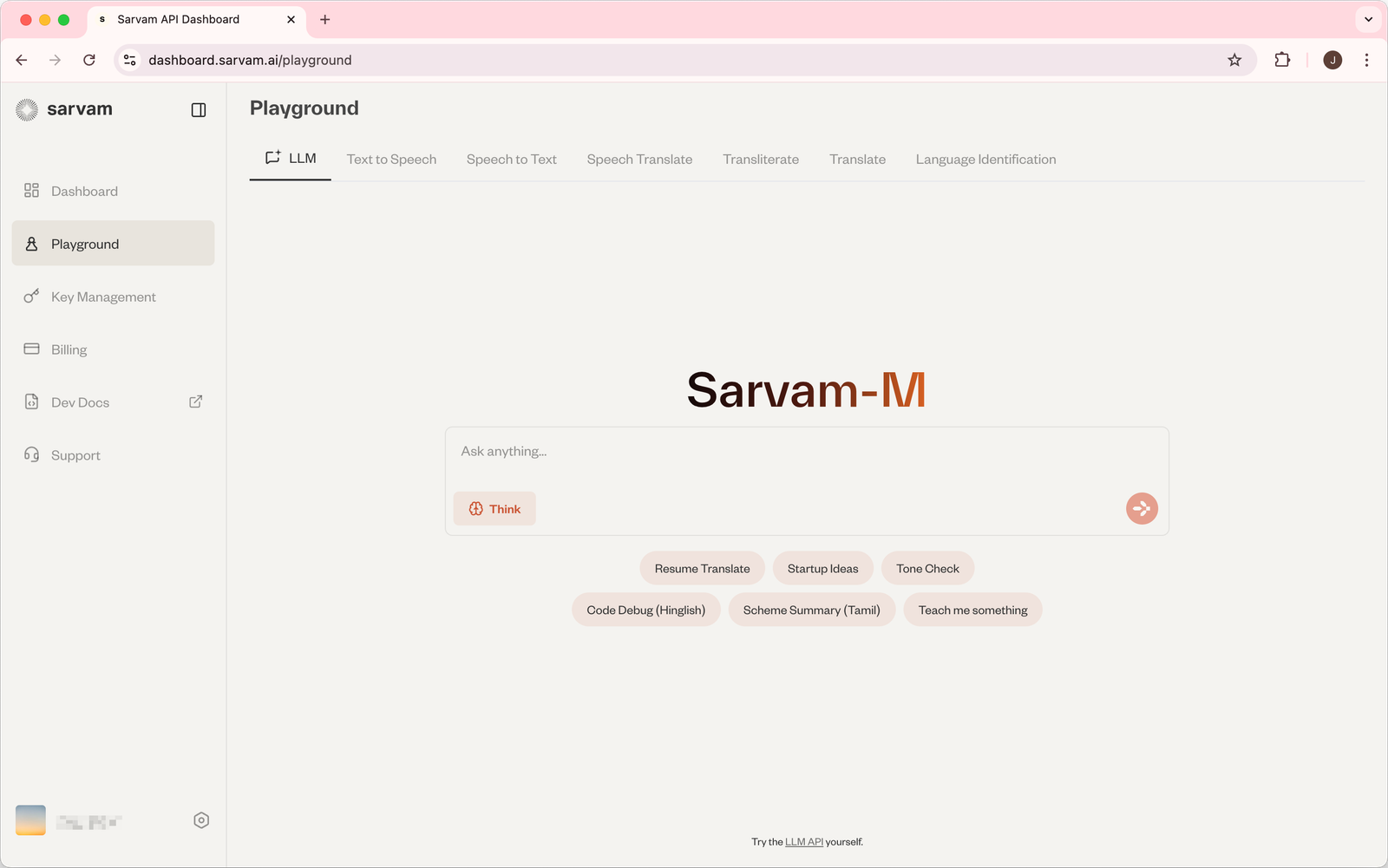

Trying the Sarvam Playground

I opened the Playground, signed up using my Google account, and landed on a page that looked like this.

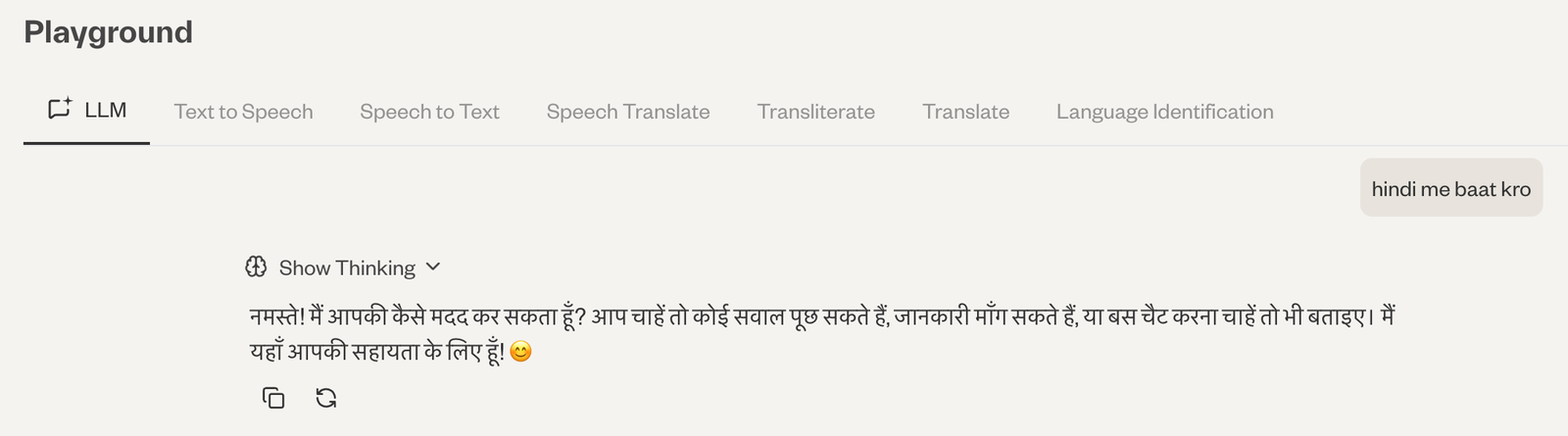

I asked it to talk to me in Hindi and it immediately replied in Hindi. It was very fast, and it felt noticeably faster than ChatGPT and Gemini. Below is the exact response it gave.

But what impressed me the most is its "Text to Speech" tool. When I opened it, it preloaded a line of text in Odia, and when I clicked the generate button, it spoke the line perfectly.

To test further, I gave it the paragraph below in English, asked it to translate it to Odia, and then converted the translation to audio. I'm putting everything below.

The English text:

The solar system is a group of objects that move around the Sun. The Sun is at the center and is the biggest object in the system. It gives off heat and light that make life on Earth possible. There are eight main planets that go around the Sun: Mercury, Venus, Earth, Mars, Jupiter, Saturn, Uranus, and Neptune. These planets move in paths called orbits.

Translated Odia text:

ସୌରଜଗତ ହେଉଛି ସୂର୍ଯ୍ୟଙ୍କ ଚାରିପାଖେ ଘୂର୍ଣ୍ଣନ କରୁଥିବା ବସ୍ତୁମାନଙ୍କର ଏକ ସମୂହ। ସୂର୍ଯ୍ୟ କେନ୍ଦ୍ରରେ ଅବସ୍ଥିତ ଏବଂ ସୌରଜଗତର ସବୁଠାରୁ ବଡ଼ ବସ୍ତୁ। ଏହା ଉତ୍ତାପ ଏବଂ ଆଲୋକ ବିସ୍ତାର କରେ ଯାହା ପୃଥିବୀରେ ଜୀବନ ସମ୍ଭବ କରିଥାଏ। ସୂର୍ଯ୍ୟଙ୍କ ଚାରିପାଖେ ପରିକ୍ରମା କରୁଥିବା 8ଟି ମୁଖ୍ୟ ଗ୍ରହ ଅଛନ୍ତି: ବୁଧ, ଶୁକ୍ର, ପୃଥିବୀ, ମଙ୍ଗଳ, ବୃହସ୍ପତି, ଶନି, ଅରୁଣ, ଏବଂ ନେପ୍ଚ୍ୟୁନ। ଏହି ଗ୍ରହମାନେ କକ୍ଷପଥ ନାମକ ପଥରେ ଘୂର୍ଣ୍ଣନ କରନ୍ତି।

And the final audio:

I can't read Odia text, but I can speak the language, and I can say that the audio quality is very good. It speaks just like the presenters on Odia news channels.

I'm very impressed with the output.

Apart from this, they have other tools like Speech to Text, Speech Translate, Transliterate, Translate, and Language Identification. I tried the Speech to Text tool by uploading the same Odia audio, and it transcribed it perfectly.

The other tools I tried worked well too.

One problem, though: when I put 2 paragraphs of text into the text-to-speech tool, it didn't work and just said "internal server error". The error should be more descriptive.
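I don't know what triggers the error. If it turns out to be an input length limit (a guess on my part, not something the error or the docs confirm), one workaround would be to split long text into smaller pieces and convert them one at a time. Here is a minimal sketch, where `splitIntoChunks` and the `maxChars` value are both hypothetical:

```javascript
// Hypothetical helper: splits text into chunks of at most maxChars,
// breaking on sentence boundaries where possible.
// Note: lengths are counted in UTF-16 code units (JavaScript's string length).
function splitIntoChunks(text, maxChars) {
  if (!(maxChars > 0)) {
    throw new RangeError('maxChars must be a positive number');
  }

  // A sentence ends with ., !, ? or the danda (।) used in Odia and Hindi.
  // The second alternative catches trailing text with no end punctuation.
  const sentences = text.match(/[^.!?।]*[.!?।]+\s*|[^.!?।]+$/g) || [];

  const chunks = [];
  let current = '';
  for (const sentence of sentences) {
    // Close the current chunk if adding this sentence would overflow it.
    if (current && (current + sentence).length > maxChars) {
      chunks.push(current.trim());
      current = '';
    }
    current += sentence;

    // A single sentence longer than the limit is cut at the limit.
    while (current.length > maxChars) {
      chunks.push(current.slice(0, maxChars));
      current = current.slice(maxChars);
    }
  }
  if (current.trim()) chunks.push(current.trim());
  return chunks;
}
```

Each chunk could then be pasted into the tool separately. The right value for `maxChars` is something to find by trial, since I haven't seen a documented number.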

Trying via the API

I received ₹1,000 worth of free credits when I created a new account on the platform, so I also decided to try it via the API and see how it performs.

I went through the docs and built a simple interface with HTML and JavaScript to try the API. Everything is hard-coded, as you can see here:

```html
<!DOCTYPE html>
<html>
<head>
  <title>Sarvam Chat</title>
</head>
<body>
  <h1>Ask Sarvam</h1>
  <input type="text" id="userInput" placeholder="Type your question..." style="width: 300px;">
  <button onclick="callSarvam()">Send</button>

  <h2>Response:</h2>
  <pre id="output">Waiting for input...</pre>

  <script>
    // Send the typed question to the chat completions endpoint and show the reply.
    async function callSarvam() {
      const userMessage = document.getElementById('userInput').value;

      const response = await fetch('https://api.sarvam.ai/v1/chat/completions', {
        method: 'POST',
        headers: {
          'Authorization': 'Bearer YOUR_API_KEY_HERE',
          'Content-Type': 'application/json'
        },
        body: JSON.stringify({
          messages: [
            { role: 'system', content: "you're sarvam, an llm" },
            { role: 'user', content: userMessage }
          ],
          model: 'sarvam-m'
        })
      });

      // Show the HTTP status code if the request fails.
      if (!response.ok) {
        document.getElementById('output').textContent = 'Error: ' + response.status;
        return;
      }

      const data = await response.json();
      const content = data.choices[0].message.content;
      document.getElementById('output').textContent = content;
    }
  </script>
</body>
</html>
```

You just need to replace YOUR_API_KEY_HERE with your actual API key, and then you can ask questions and get answers on the page. I asked a few questions and it answered just as it does on the playground. The text response from the API is fast as well.
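If you want to reuse the call outside of this page, the request building and the response parsing can be pulled into small functions. This is a minimal sketch, not an official client: the endpoint, the `sarvam-m` model name, and the Bearer header come from the page above, while `buildChatRequest`, `extractReply`, and `askSarvam` are names I made up. The response shape is assumed to be the one the page above already reads (`choices[0].message.content`).

```javascript
// Endpoint taken from the page above.
const SARVAM_CHAT_URL = 'https://api.sarvam.ai/v1/chat/completions';

// Hypothetical helper: builds the fetch() options without sending anything.
function buildChatRequest(apiKey, userMessage) {
  if (!userMessage || !userMessage.trim()) {
    throw new Error('Message must not be empty');
  }
  return {
    method: 'POST',
    headers: {
      'Authorization': 'Bearer ' + apiKey,
      'Content-Type': 'application/json'
    },
    body: JSON.stringify({
      model: 'sarvam-m',
      messages: [
        { role: 'system', content: "you're sarvam, an llm" },
        { role: 'user', content: userMessage.trim() }
      ]
    })
  };
}

// Hypothetical helper: reads the reply text and fails loudly
// if the response does not have the assumed shape.
function extractReply(data) {
  const content = data?.choices?.[0]?.message?.content;
  if (typeof content !== 'string') {
    throw new Error('Unexpected response shape');
  }
  return content;
}

// Hypothetical wrapper: sends the request and turns HTTP errors into exceptions.
async function askSarvam(apiKey, userMessage) {
  const response = await fetch(SARVAM_CHAT_URL, buildChatRequest(apiKey, userMessage));
  if (!response.ok) {
    throw new Error('Sarvam API returned HTTP ' + response.status);
  }
  return extractReply(await response.json());
}
```

Calling `askSarvam('YOUR_API_KEY_HERE', 'Hello')` returns a promise that resolves to the reply text. Keep in mind that anything in a web page's source, including the API key, is visible to visitors, so for a public site the call belongs behind your own server.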

Sarvam API pricing

Below is the pricing for the different services in Indian Rupees (₹), as listed on the pricing page.

| Category | Service | Price |
|---|---|---|
| Speech APIs | Speech to Text | ₹30 / hour |
| Speech APIs | Speech to Text & Translate | ₹30 / hour |
| Speech APIs | Speech to Text with Diarization | ₹45 / hour |
| Speech APIs | Speech to Text, Translate & Diarization | ₹45 / hour |
| Language Tools | Translate | ₹20 / 10K chars |
| Language Tools | Transliterate | ₹20 / 10K chars |
| Language Tools | Language Identification | ₹3.5 / 10K chars |
| Text to Speech | Text to Speech | ₹15 / 10K chars |
| Analytics | Call Analytics | ₹112 / hour |

I don't know why the pricing for chat completions isn't mentioned on the pricing page or anywhere else.
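To get a feel for what the listed rates mean in practice, here is a small calculator. The rates are copied from the pricing table, but the function names and the service keys are my own, and I'm assuming billing is strictly proportional to usage with no minimum charge or rounding, which the pricing page may or may not do.

```javascript
// Rates copied from the pricing table above.
// "per" is the size of one billing unit: 1 hour of audio or 10,000 characters.
const RATES = {
  speechToText: { rupees: 30, per: 1 },
  speechToTextDiarization: { rupees: 45, per: 1 },
  translate: { rupees: 20, per: 10000 },
  transliterate: { rupees: 20, per: 10000 },
  languageIdentification: { rupees: 3.5, per: 10000 },
  textToSpeech: { rupees: 15, per: 10000 },
  callAnalytics: { rupees: 112, per: 1 }
};

// Looks up a rate and fails loudly on an unknown service key.
function getRate(service) {
  const rate = RATES[service];
  if (!rate) throw new Error('Unknown service: ' + service);
  return rate;
}

// Estimated cost in rupees for a quantity given in hours or characters.
// Assumes linear, pro-rata billing (my assumption).
function estimateCost(service, quantity) {
  const rate = getRate(service);
  return (quantity / rate.per) * rate.rupees;
}

// How many hours or characters a given budget covers, under the same assumption.
function unitsForBudget(service, budgetRupees) {
  const rate = getRate(service);
  return (budgetRupees / rate.rupees) * rate.per;
}
```

Under that assumption, the ₹1,000 of free credits would cover 500,000 characters of translation (`unitsForBudget('translate', 1000)`) or a little over 33 hours of speech to text.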

But... do people care about an Indic LLM?

The real question is: do people in India actually need an Indic model like this? Several people, including Deedy and Arpit Bhayani, say there isn't enough demand for a model like this, and that this is why it has only a few hundred downloads on Hugging Face.

I think the main reasons for the low number of downloads are:

- very few people in India care about LLMs as of now

- most people are end users and won't be downloading the model from Hugging Face, and

- the model is not marketed well enough, so people need to be made aware of what it can do

Honestly, I loved the model. If I had to build a multilingual website, I would pick Sarvam-M over OpenAI's or Gemini's models to translate my content without a second thought.

🗓️ Update: June 4, 2025

The number of downloads on Hugging Face has increased to 269,186 at the time of writing this update. But I am not seeing much buzz about Sarvam AI on social media or on YouTube, so it's hard to say if the number is genuine or manipulated.

Overall, I liked the following things:

- ability to generate output in Indian languages

- formatting and quality of the output

- inference speed when asking questions

And a few things that can be improved:

- documentation for different endpoints

- making people aware of the model

I also recorded a video of myself trying the new Sarvam-M model via the playground and the API, and shared my thoughts in it.

That's it.

I am still using and testing the model and will keep updating this page if I learn something new.
