Upgrading Self-hosted Umami to v3 on a VPS

I recently wrote about self-hosting Umami Analytics on a small Hetzner VPS using Docker and Caddy.

Since then, Umami shipped v3 and I wanted to upgrade my existing install (v2.19.0) without losing any data. In this post, I am sharing the exact steps I followed on my live server.

If you installed Umami by following my earlier post, this guide should be a direct drop-in upgrade for you.

1. Log in to the VPS

From my local machine (macOS), I first SSHed into the VPS:

ssh root@your_server_ip

The server is running Ubuntu 24.04 with Docker CE, same as before.

After logging in I noticed a pending kernel update and a restart notice, so I rebooted first to avoid surprises in the middle of the upgrade:

reboot

Then I connected again:

ssh root@your_server_ip

2. Confirm the current Umami setup

Once logged in, I checked what files are in the root directory:

ls

Output:

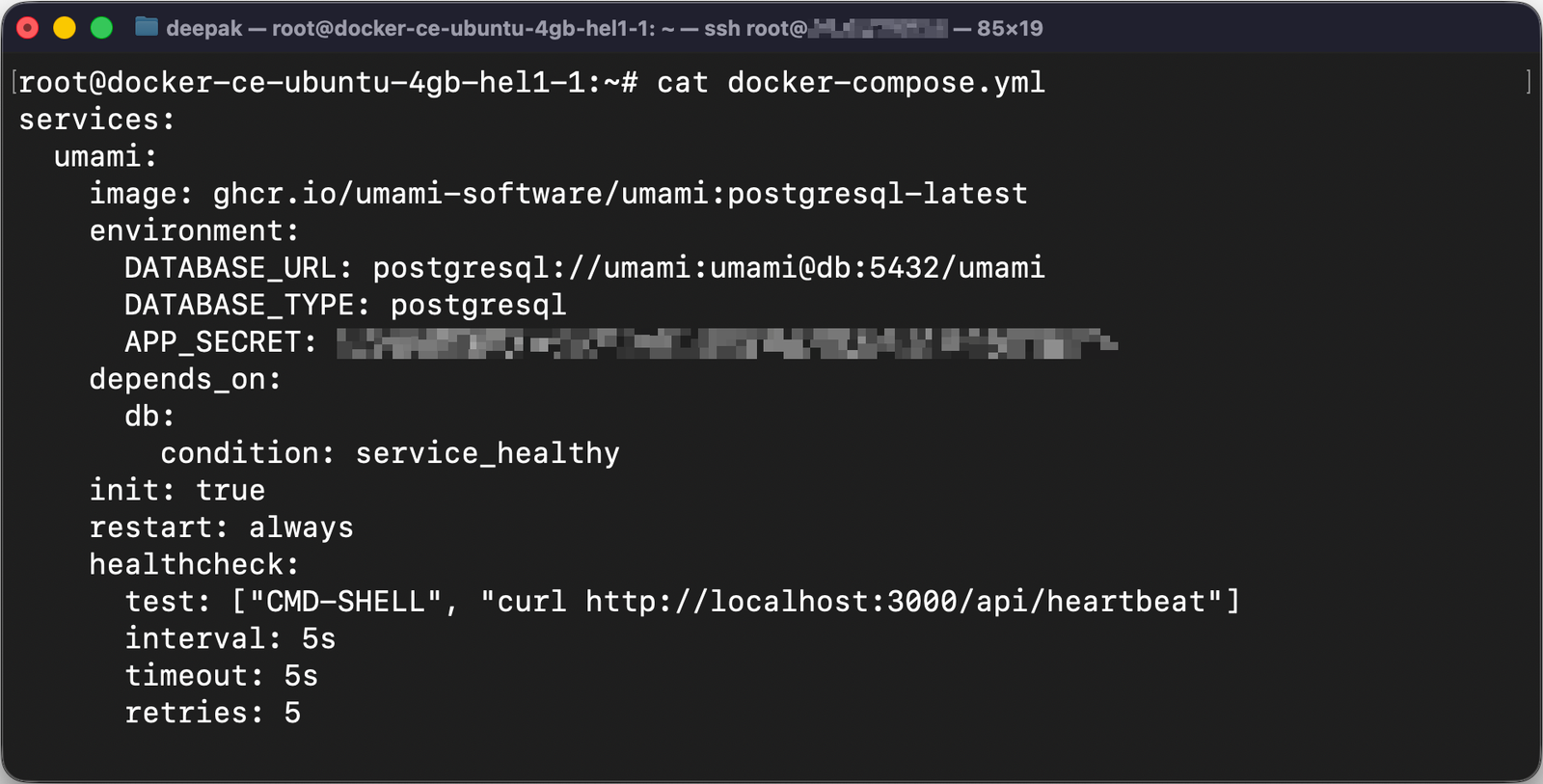

Caddyfile docker-compose.yml

So I opened docker-compose.yml to confirm the current setup:

cat docker-compose.yml

The important part is the same Docker setup I used in the original installation post:

- umami container using the postgresql-latest image

- db container running postgres:15-alpine

- caddy in front, handling HTTPS and the domain

To make sure everything was running fine before touching anything, I checked the containers:

docker compose ps

Sample output:

NAME IMAGE COMMAND SERVICE CREATED STATUS PORTS

root-caddy-1 caddy:latest "caddy run --config …" caddy 4 weeks ago Up 2 hours 0.0.0.0:80->80/tcp, ...

root-db-1 postgres:15-alpine "docker-entrypoint.s…" db 4 weeks ago Up 2 hours (healthy) 5432/tcp

root-umami-1 ghcr.io/umami-software/umami:postgresql-latest "docker-entrypoint.s…" umami 4 weeks ago Up 2 hours (healthy) 3000/tcp

All good here.

3. Create a PostgreSQL backup (do not skip this)

Before changing any image or running migrations, I created a full dump of the Umami database.

Since the db service is using:

POSTGRES_DB=umami

POSTGRES_USER=umami

the backup command is:

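docker compose exec db pg_dump -U umami umami > umami-backup-$(date +%F-%H%M).sql

This runs pg_dump inside the db container and saves the file on the host with a timestamp in the name. Adding -T (docker compose exec -T db ...) disables pseudo-TTY allocation, which is the safer choice when redirecting output to a file.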

Then I double checked that the backup file exists and has some size:

ls -lh umami-backup-*.sql

Output on my server:

-rw-r--r-- 1 root root 8.5M Nov 19 14:48 umami-backup-2025-11-19-1448.sql

8.5 MB looks right for my current traffic.

At this point, even if the migration fails, I can restore the DB from this SQL file. That gives a lot of peace of mind.
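A size check alone does not prove that the dump finished. As a minimal sketch, assuming the plain-text format that pg_dump produces by default, the helper below also looks for the header and trailer comments that pg_dump writes at the start and end of a completed dump. The function name check_backup is made up for illustration and is not part of Umami or PostgreSQL.

```shell
# Hypothetical helper: sanity-check a plain-text pg_dump file.
# Returns 0 if the file is non-empty and contains both the header and the
# trailer comment that pg_dump writes in plain format, 1 otherwise.
check_backup() {
    backup_file=$1
    # -s: file exists and is larger than zero bytes
    [ -s "$backup_file" ] || { echo "missing or empty: $backup_file" >&2; return 1; }
    # The header comment sits near the top of the dump
    head -n 20 "$backup_file" | grep -q 'PostgreSQL database dump' \
        || { echo "no pg_dump header: $backup_file" >&2; return 1; }
    # The trailer comment is only written when pg_dump ran to completion
    tail -n 20 "$backup_file" | grep -q 'PostgreSQL database dump complete' \
        || { echo "dump looks truncated: $backup_file" >&2; return 1; }
    echo "backup looks complete: $backup_file"
}

# Usage (file name taken from the ls output above):
# check_backup umami-backup-2025-11-19-1448.sql
```

This only confirms that the dump ran to the end. It does not replace a real test restore into a scratch database.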

4. Update the Docker image to Umami v3.0.1

Next, I edited docker-compose.yml to point to the new v3 image.

I used nano:

nano docker-compose.yml

Inside the file, in the umami service, I changed this line:

image: ghcr.io/umami-software/umami:postgresql-latest

to this:

image: ghcr.io/umami-software/umami:3.0.1

Nothing else needed to change: the DATABASE_URL, DATABASE_TYPE, APP_SECRET, and the db service all stay the same.

After saving and closing nano (Ctrl+X, Y, Enter), I confirmed the change with:

grep -A3 'umami:' docker-compose.yml

Output:

umami:

image: ghcr.io/umami-software/umami:3.0.1

environment:

DATABASE_URL: postgresql://umami:umami@db:5432/umami

Perfect.
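If you would rather not open an editor, the same tag change can be made non-interactively. This is a rough sketch, not what I ran on the server: the helper name set_umami_tag is hypothetical, and it assumes the image line looks exactly like the one shown above. It uses sed with -i.bak, so the original file is kept next to the edited one.

```shell
# Hypothetical helper: switch the Umami image tag in a compose file.
# Arguments: compose file, old tag, new tag.
# Note: the tags are used as sed patterns, so a "." matches any character.
set_umami_tag() {
    compose_file=$1
    old_tag=$2
    new_tag=$3
    # -i.bak edits in place and keeps the original as <file>.bak
    sed -i.bak "s|umami-software/umami:${old_tag}|umami-software/umami:${new_tag}|" "$compose_file" \
        || return 1
    # Print the resulting image line so the change can be confirmed by eye
    grep -n 'umami-software/umami:' "$compose_file"
}

# Usage:
# set_umami_tag docker-compose.yml postgresql-latest 3.0.1
```

The .bak copy doubles as a quick way back to the old compose file if you need to roll back later.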

5. Pull the new image

Now that the compose file points to 3.0.1, I pulled the new image:

docker compose pull umami

This downloads the ghcr.io/umami-software/umami:3.0.1 image. My output looked like this (trimmed):

[+] Pulling 18/18

✔ umami Pulled 16.0s

✔ fc2cca81d0de Pull complete 2.4s

✔ ...

✔ 14e4cc53c863 Pull complete 14.8s

Once this finished, the image was ready on the server.

6. Recreate the Umami container with v3

With the image pulled, I restarted just the umami service using the new image:

docker compose up -d --force-recreate umami

Output:

[+] Running 2/2

✔ Container root-db-1 Healthy 0.8s

✔ Container root-umami-1 Started 1.1s

Note that the db container stays the same, including the data volume. We are only replacing the app container.

7. Watch the logs and wait for migrations

The first start after the image change will run database checks and migrations. To see what is happening in real time, I tailed the logs:

docker compose logs -f umami

The logs showed:

umami-1 | > umami@3.0.1 start-docker /app

umami-1 | > npm-run-all check-db update-tracker start-server

umami-1 |

umami-1 | > umami@3.0.1 check-db /app

umami-1 | > node scripts/check-db.js

umami-1 |

umami-1 | ✓ DATABASE_URL is defined.

umami-1 | ✓ Database connection successful.

umami-1 | ✓ Database version check successful.

umami-1 | Prisma schema loaded from prisma/schema.prisma

umami-1 | Datasource "db": PostgreSQL database "umami", schema "public" at "db:5432"

umami-1 |

umami-1 | 14 migrations found in prisma/migrations

umami-1 |

umami-1 | Applying migration `14_add_link_and_pixel`

umami-1 |

umami-1 | The following migration(s) have been applied:

umami-1 | └─ 14_add_link_and_pixel/

umami-1 | └─ migration.sql

umami-1 |

umami-1 | All migrations have been successfully applied.

umami-1 |

umami-1 | ✓ Database is up to date.

umami-1 |

umami-1 | > umami@3.0.1 start-server /app

umami-1 | > node server.js

umami-1 |

umami-1 | ▲ Next.js 15.5.3

umami-1 | - Local: http://localhost:3000

umami-1 | - Network: http://0.0.0.0:3000

umami-1 |

umami-1 | ✓ Starting...

umami-1 | ✓ Ready in 485ms

This is exactly what you want to see:

- DB connection successful

- Migrations applied

- Server starting and ready

Once I saw the app was ready, I pressed Ctrl + C to stop tailing the logs.
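The three things I looked for in the logs can also be checked by a small script instead of by eye. The sketch below reads log text on standard input and searches for marker strings copied from the log excerpt above. The helper name check_umami_logs is hypothetical, and the exact wording of these messages may differ in other Umami releases, so treat the marker list as an assumption.

```shell
# Hypothetical helper: read Umami container logs on stdin and report
# whether the startup markers seen in the v3.0.1 logs are present.
check_umami_logs() {
    logs=$(cat)
    rc=0
    for marker in 'Database connection successful' \
                  'Database is up to date' \
                  'Ready in'; do
        case $logs in
            *"$marker"*) echo "ok: $marker" ;;
            *) echo "missing: $marker"; rc=1 ;;
        esac
    done
    # Non-zero exit status if any marker was not found
    return $rc
}

# Usage:
# docker compose logs --no-color umami | check_umami_logs
```

The function exits non-zero when a marker is missing, so it can be used in a loop or a deployment script.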

8. Confirm that Umami v3 is live

With the container running, I opened my Umami domain in the browser (the one I pointed to Caddy in the first post).

The login page loaded as usual. After logging in:

- The dashboard still showed all the historical data

- The list of websites was intact

- New pageviews were being tracked without changing the tracking script

If something looked broken here, I still had my SQL backup file to fall back to, but in my case everything worked on the first try.

9. What to do if something breaks

If the logs show errors, or the app keeps restarting in a loop, here is a quick recovery plan:

- Stop the stack:

docker compose down

- Bring only the database back up:

docker compose up -d db

- Restore the backup:

cat umami-backup-YYYY-MM-DD-HHMM.sql | docker compose exec -T db psql -U umami umami

Replace the file name with your backup file name. Because the dump was created without --clean, psql will report "already exists" errors for any tables that are still present, so the restore is cleanest into an empty umami database.

- Change the image back to the old one in docker-compose.yml:

image: ghcr.io/umami-software/umami:postgresql-latest

- Start Umami again:

docker compose up -d umami

You should now be back on the old version with the old data.
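The database part of the recovery plan (the first three steps) can be wrapped in one function. This is a sketch under stated assumptions, and I did not need to run it myself: the names run and restore_umami_db are hypothetical, and the dropdb/createdb step is my addition so that the dump lands in an empty database. Both are standard PostgreSQL client tools, assumed here to be available in the postgres image. By default the function only prints the commands. Set DRY_RUN=0 to actually execute them.

```shell
# Hypothetical helpers; the names are illustrative, not part of Umami.
# Commands are printed, and only executed when DRY_RUN=0 is set.
run() {
    echo "+ $*"
    [ "${DRY_RUN:-1}" = "0" ] || return 0
    "$@"
}

restore_umami_db() {
    backup_file=$1
    # Refuse to touch the database unless the dump is readable
    [ -r "$backup_file" ] || { echo "cannot read backup: $backup_file" >&2; return 1; }

    run docker compose down || return 1
    # --wait blocks until the db service reports healthy
    run docker compose up -d --wait db || return 1
    # Assumption: recreate an empty database so the dump does not collide
    # with tables that are still present
    run docker compose exec -T db dropdb -U umami --if-exists umami || return 1
    run docker compose exec -T db createdb -U umami umami || return 1
    # Feed the dump to psql on stdin; ON_ERROR_STOP aborts at the first error
    run sh -c 'docker compose exec -T db psql -U umami -v ON_ERROR_STOP=1 umami < "$1"' sh "$backup_file"
}

# Usage:
# restore_umami_db umami-backup-2025-11-19-1448.sql              # print only
# DRY_RUN=0 restore_umami_db umami-backup-2025-11-19-1448.sql    # execute
```

Reverting the image tag and starting the umami service still happen afterwards, as in the last two steps of the list. Read the printed commands carefully before running with DRY_RUN=0, since dropdb deletes the current database.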

10. Final notes

That is pretty much all I had to do to upgrade Umami from the postgresql-latest v2 image to 3.0.1:

- One SSH session

- One pg_dump backup

- One image tag change

- A pull, an up, and a quick log check

If you are running the same Docker + Caddy setup from my earlier post, you can follow the same steps line by line, just replace the IP and domain with yours.

If you try this upgrade and hit any issues, the logs and the SQL backup will be your best friends – check those first before touching anything else.
