Launched SharePDF app – a new SaaS

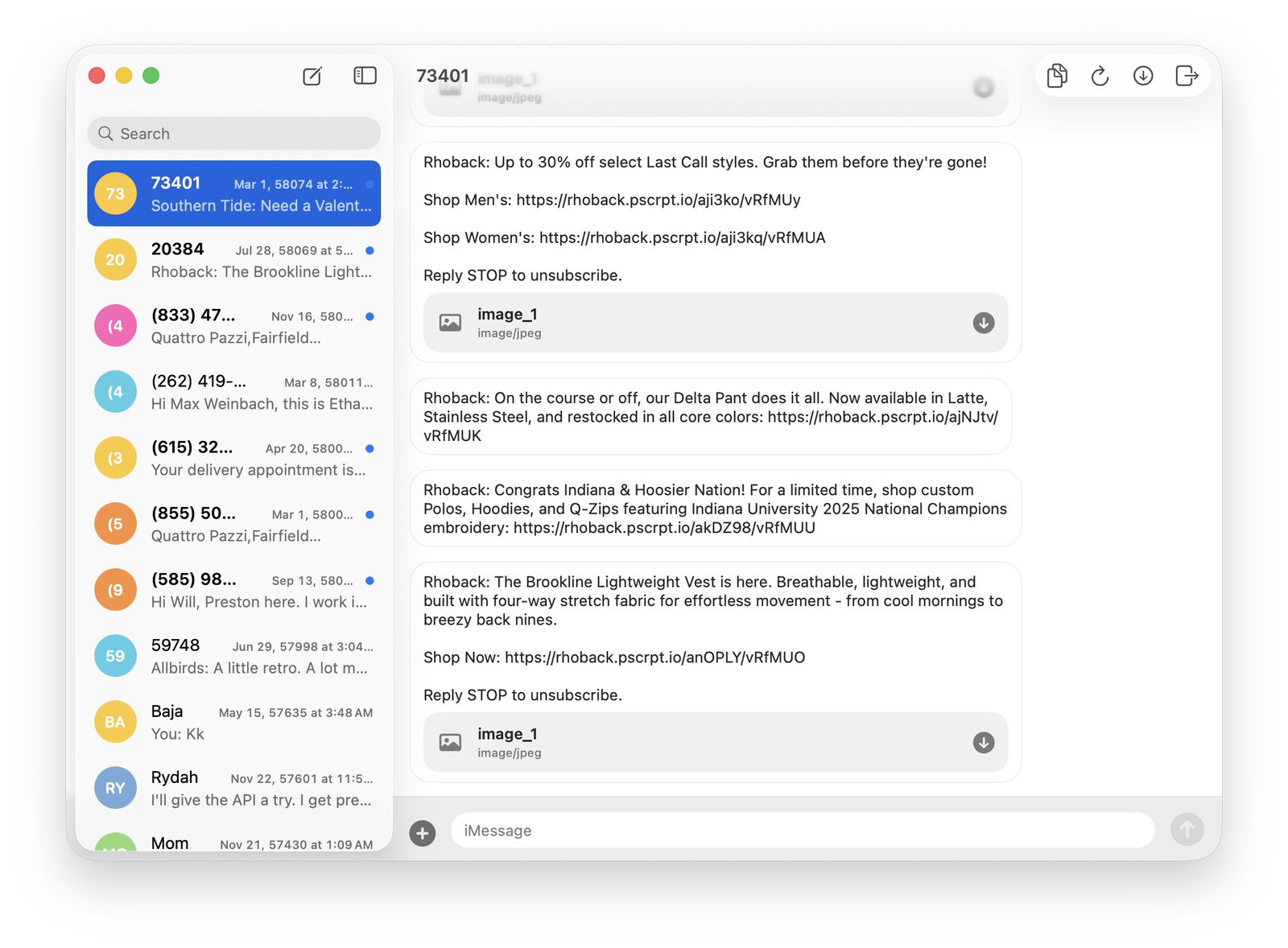

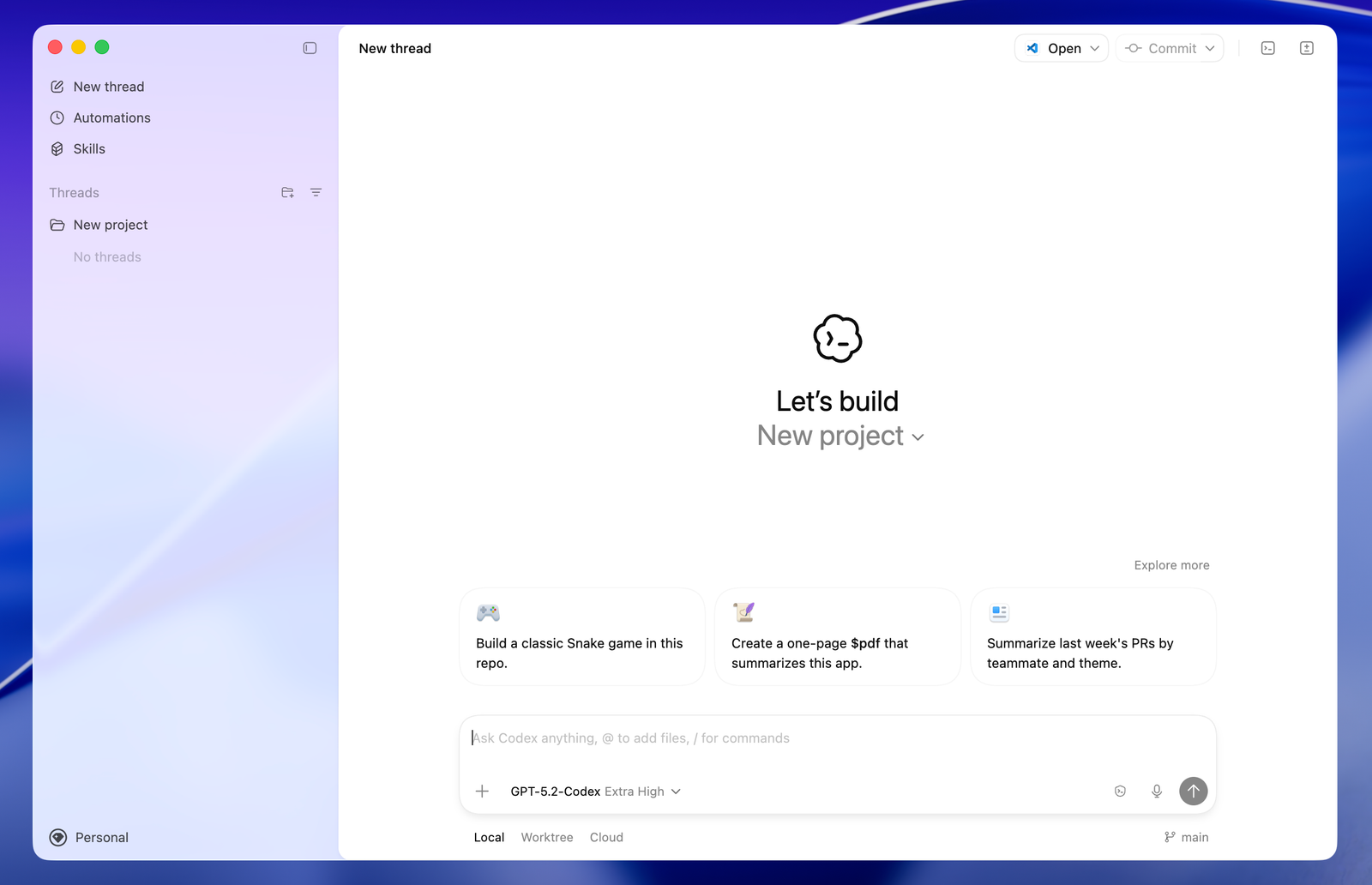

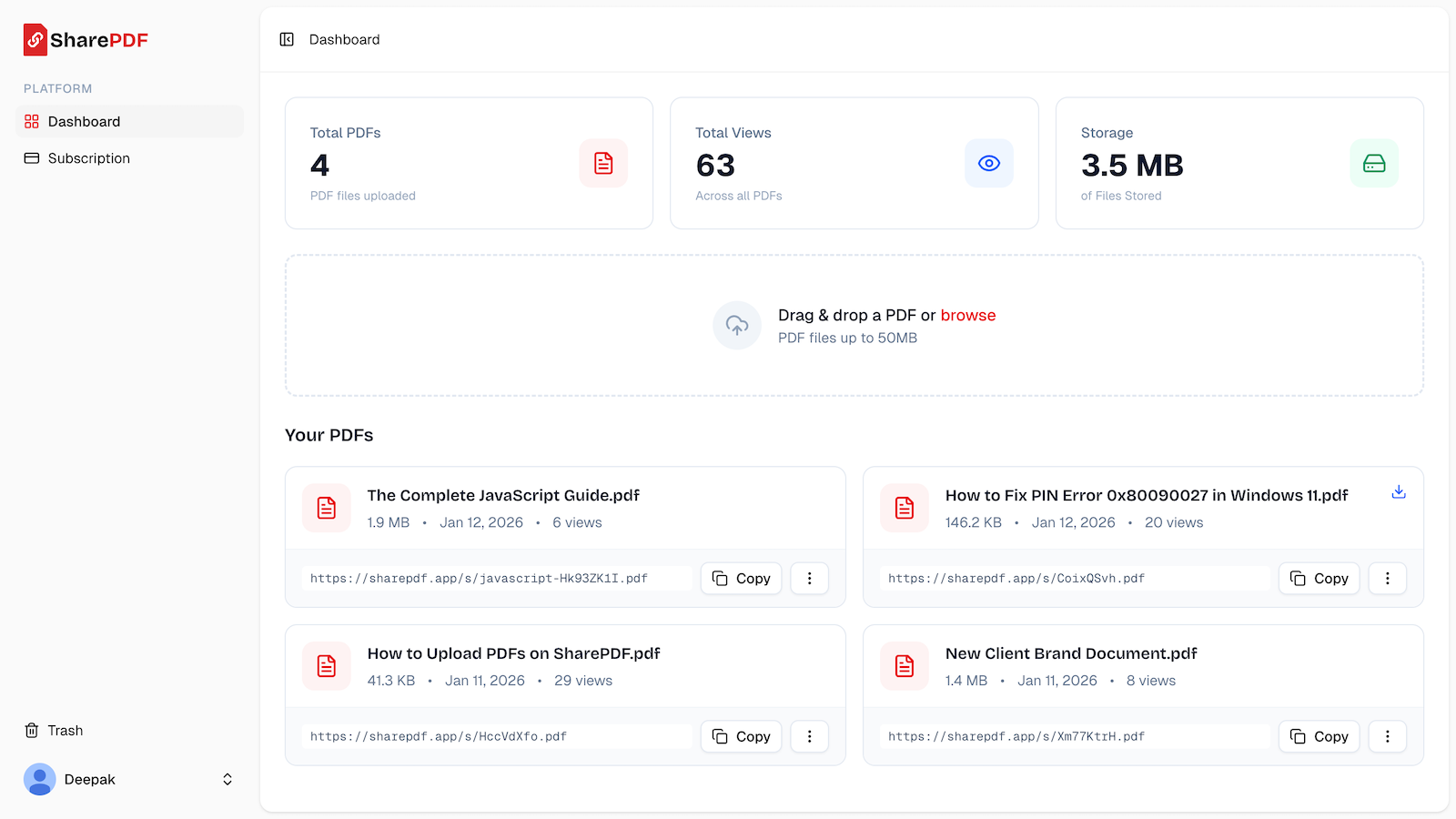

I launched a new simple SaaS app called SharePDF that helps you share PDFs online with trackable links. Just upload a PDF, get the shareable link, share anywhere online and then track views in the dashboard. Here's what the dashboard looks like:

It's a fairly simple app with features like:

- Drag-n-drop upload

- Short customizable share URLs

- View analytics tracking

- Secure storage on Cloudflare R2

- Fast loading via Cloudflare CDN

- No ads on PDF URLs

- Option to enable or disable downloads

- Viewers don't need to sign up

I am still improving the existing features and slowly adding a few new ones. I'm not spending a lot of time on it right now, but I will once I get one or two paying customers for the app.

You can also follow my weblog for the app on this page of my website.